Digital health interventions (DHIs) are transforming how we support people, thanks to their accessibility, flexibility, and reach across geographic boundaries. Most importantly, DHIs offer a private and personalized space to address health and social needs. Users can ask questions, receive support, and engage with content in ways that feel safe and empowering.

Beyond their convenience, DHIs can be especially valuable for individuals who feel stigmatized or hesitant to seek help in traditional healthcare settings. For instance, DHIs are proving to be powerful tools for behavior change and health promotion among sexual and gender minority youth, who often face barriers to care.

Designing for Impact: Why Evaluation Gets Tricky

Digital health tools hold incredible promise, but figuring out whether they really work isn’t as straightforward as it might seem. Imagine you download a new health app. You open it once, poke around, and then forget about it. Compare that to someone else who logs in every day, completes all the lessons, and really digs into the features. Both users technically “used” the app, but their experiences are worlds apart. That difference is what researchers call engagement. If we simply group all users together and assume they had the same experience, we risk drawing the wrong conclusions.

Traditional studies compare a group that gets the program to a group that doesn’t, which works well when attendance is tracked, as in an in-person class. But digital programs are different: downloading doesn’t equal doing. Sometimes users start a session but get pulled away by a text, an alarm, or another app’s notification. That’s why we ask not just “Did someone have access?” but “How did they actually use it?”

Engagement: At the Heart of Digital Health’s Impact

In digital health, engagement isn’t about signing up; it’s about how you interact with the program. Do you come back often? Do you spend time with it? Do you explore deeply or just skim the surface?

The good news is that digital programs can track this automatically through what’s called paradata: the behind-the-scenes information about how people interact with the platform. Paradata can be examined along four main dimensions, as suggested by Hightow-Weidman and Bauermeister:

- Amount: How many lessons or activities does someone complete?

- Frequency: How often do they come back?

- Duration: How long do they spend using a program feature?

- Depth: How much do they explore beyond the basic content?

These measures tell us much more than just “did someone use it or not?” They help uncover why and how a digital tool makes a difference. By looking closely at patterns of use, we can see whether the program is reaching people, holding their attention, and ultimately making an impact on health.
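To make the four dimensions concrete, here is a minimal Python sketch of how they might be computed from a paradata log. The `Event` record, its field names, and the choice to count depth as the number of features visited beyond a set of core lessons are all illustrative assumptions, not the schema or scoring rules of any actual program.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date


@dataclass
class Event:
    """One hypothetical paradata record: a single visit to a feature."""
    user_id: str
    day: date
    feature: str      # e.g. "lesson_1", "forum" (made-up names)
    seconds: int      # time spent on the feature during this visit
    completed: bool   # whether the activity was finished


def summarize_engagement(events, core_features):
    """Return amount, frequency, duration, and depth for each user."""
    per_user = defaultdict(list)
    for event in events:
        per_user[event.user_id].append(event)

    core = set(core_features)
    summary = {}
    for user_id, rows in per_user.items():
        completed = {r.feature for r in rows if r.completed}
        visited = {r.feature for r in rows}
        summary[user_id] = {
            # Amount: distinct activities completed
            "amount": len(completed),
            # Frequency: distinct days with any activity
            "frequency": len({r.day for r in rows}),
            # Duration: total minutes across all visits
            "duration_min": sum(r.seconds for r in rows) / 60,
            # Depth (assumed definition): features visited beyond the core
            "depth": len(visited - core),
        }
    return summary


# Made-up log: user "a" opens the app once, user "b" keeps coming back.
events = [
    Event("a", date(2024, 1, 1), "lesson_1", 120, False),
    Event("b", date(2024, 1, 1), "lesson_1", 600, True),
    Event("b", date(2024, 1, 2), "lesson_2", 540, True),
    Event("b", date(2024, 1, 2), "forum", 300, False),
    Event("b", date(2024, 1, 5), "goal_tracker", 180, True),
]
result = summarize_engagement(events, core_features=["lesson_1", "lesson_2"])
```

Both users “used” the app, yet user `a` scores zero on amount and depth while user `b` registers on all four dimensions, which is exactly the contrast a simple used-it-or-not flag would hide.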

Does Engagement Really Matter?

Think of digital health like the gym. Signing up is the first step; results come from showing up. Across Eidos projects, higher engagement is consistently linked with better outcomes:

- STARS: Emerging adults who leaned into the app and peer sessions were more likely to put their safety plans into action during high-stress moments.

- myDEx: Young gay and bisexual men who returned for more sessions reported lower internalized stigma.

- HealthMpowerment.org: About an hour of use across a year was linked with safer sexual behaviors among young Black men.

- imi: LGBTQ+ youth who explored more deeply reported stronger coping and confidence.

The pattern is clear: meaningful engagement is the workout that builds impact.

From Evaluation to Implementation: What’s Next?

Engagement data isn’t just a scorecard—it’s a roadmap. It helps us:

- Time nudges and booster messages when attention naturally dips

- See which features drive change (and which to simplify)

- Understand whether engagement fuels behavior change, or whether feeling better keeps people engaged

- Personalize support so tools meet people where they are

This is how we move from “Does it work?” to “How do we make it work better for more people?” These insights help streamline what works, spark innovation, and lead to more effective, user-centered digital health solutions. Ultimately, this ensures users can fully benefit from the potential of these powerful digital tools.

A New Study to Push the Field Forward

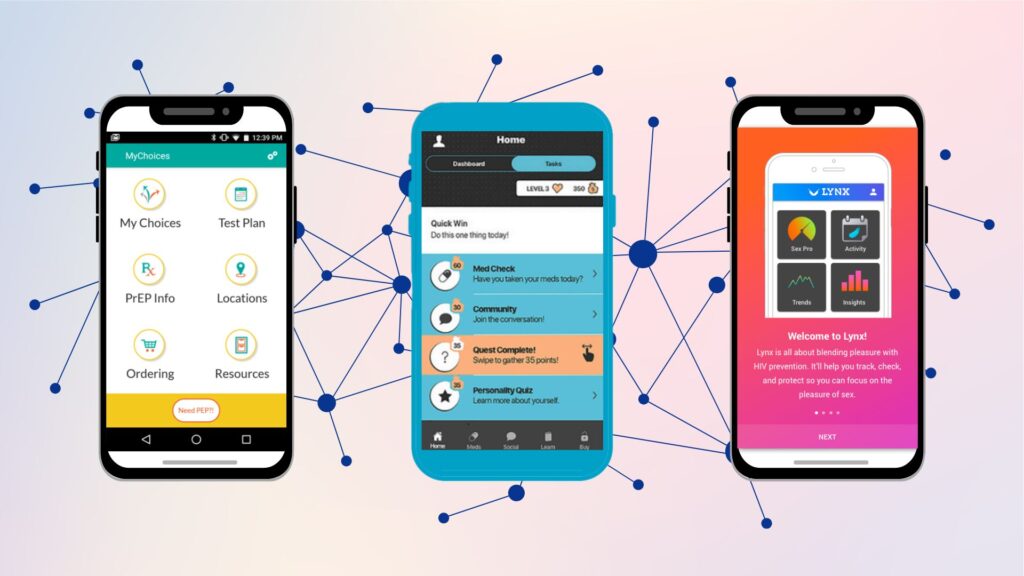

To dive deeper into these questions, a new study funded by the National Institute of Mental Health is now underway. Led by Dr. Seul Ki Choi (Director of Science at Eidos), Dr. José Bauermeister (Founding Director of Eidos), and Drs. Hightow-Weidman and Muessig at the Institute for Digital Health & Innovation (IDHI) at Florida State University, this project is analyzing engagement across five iTech trials: MyChoices, LYNX, TechStep, Get Connected, and P3.

These studies, part of the Adolescent Medicine Trials Network for HIV Interventions (ATN), focused on HIV prevention and care for at-risk youth in the U.S.

A key challenge in this work is that each digital platform collects and structures paradata in slightly different ways. One app may track time spent, another may emphasize log-ins, while another highlights completed activities. Without harmonization, it becomes difficult to compare across studies or know which forms of engagement truly matter most for outcomes.

This two-year project addresses that gap by working to:

- Identify patterns of engagement across different interventions.

- Harmonize engagement measures across platforms to enable platform-agnostic evaluation.

- Examine how engagement relates to HIV prevention and care outcomes.

- Explore how engagement evolves over time and influences behavior change.

What sets this study apart is its cross-study comparison: a first-of-its-kind effort to create and test common engagement metrics across five different digital trials. By looking across platforms, the team aims to move beyond surface-level, app-specific metrics and establish principles for evaluating engagement in ways that are broadly applicable.
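As a rough illustration of what harmonization can involve, the Python sketch below maps platform-specific fields onto a shared set of engagement measures and then standardizes values within a platform so they can be compared on a common scale. The platform names (`app_a`, `app_b`), field names, and the use of z-scores are assumptions made for this example; they do not describe the study’s actual data or methods.

```python
from statistics import mean, pstdev

# Hypothetical mappings from each platform's raw fields to common measures.
FIELD_MAP = {
    "app_a": {"total_seconds": "duration_min", "sessions": "frequency"},
    "app_b": {"logins": "frequency", "modules_done": "amount"},
}

# Raw fields that need a unit conversion (here: seconds to minutes).
UNIT_DIVISOR = {("app_a", "total_seconds"): 60}


def harmonize(platform, record):
    """Map one raw record onto the common schema; unmeasured fields stay None."""
    out = {"amount": None, "frequency": None, "duration_min": None}
    for raw_name, common_name in FIELD_MAP[platform].items():
        if raw_name in record:
            divisor = UNIT_DIVISOR.get((platform, raw_name), 1)
            out[common_name] = record[raw_name] / divisor
    return out


def zscores(values):
    """Standardize values within one platform (all zeros if no variation)."""
    centre = mean(values)
    spread = pstdev(values)
    if spread == 0:
        return [0.0 for _ in values]
    return [(v - centre) / spread for v in values]


# Made-up records from two platforms that log different things.
a_rows = [harmonize("app_a", r) for r in (
    {"total_seconds": 600, "sessions": 2},
    {"total_seconds": 1800, "sessions": 6},
)]
b_row = harmonize("app_b", {"logins": 5, "modules_done": 3})
a_frequency_z = zscores([row["frequency"] for row in a_rows])
```

Keeping unmeasured dimensions as `None` rather than zero is a deliberate choice in this sketch: a platform that never logged time spent should not look like one where users spent no time.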

Analyzing engagement longitudinally also allows researchers to uncover when and how use makes a difference, helping determine the optimal level of engagement needed for meaningful outcomes, spotting when engagement drops off, and identifying the best moments to re-engage users. These insights will inform just-in-time strategies and help shape future digital health interventions so they are more tailored, responsive, and impactful.
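One simple way to picture drop-off detection is shown in the Python sketch below, which scans a user’s weekly session counts for the first stretch of consecutive inactive weeks. The function name, the weekly granularity, and the two-week threshold are illustrative assumptions, not rules taken from the study.

```python
def first_dropoff_week(weekly_sessions, quiet_weeks=2):
    """Return the 0-based index of the first week that starts a run of
    `quiet_weeks` consecutive weeks with zero sessions, or None if the
    user never goes quiet for that long."""
    run = 0
    for week, count in enumerate(weekly_sessions):
        run = run + 1 if count == 0 else 0
        if run == quiet_weeks:
            return week - quiet_weeks + 1
    return None


# Made-up usage history: one skipped week, then a sustained lapse.
history = [3, 2, 0, 1, 0, 0, 0]
dropoff = first_dropoff_week(history)
```

Here the single skipped week is ignored and the lapse is flagged at the start of the longer gap, which is the kind of moment a re-engagement message might target.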

Using data from a multicenter HIV research network, the findings will inform future implementation strategies aimed at improving engagement in digital HIV interventions. Ultimately, this project will provide the field with a roadmap for measuring engagement in consistent, platform-agnostic ways, an essential step toward ensuring that digital health tools can truly reduce HIV risks for youth.

Final Thoughts

Digital health interventions hold incredible promise, but to unlock their full potential, we need to understand how users engage with them. Engagement isn’t just a side metric; it’s central to how these tools work. By focusing on engagement, we can design smarter tools, deliver better outcomes, and ultimately reduce health disparities among youth and other marginalized populations.

Have thoughts or questions about digital engagement or DHIs? Get in touch with Dr. Choi at skchoi@upenn.edu.